brain of mat kelcey...

counting bees on a rasp pi with a conv net

May 17, 2018 at 12:30 PM | categories: projects

counting bees

the first thing i thought when we set up our bee hive was "i wonder how you could count the number of bees coming and going?"

after a little research i discovered it seems no one has a good non-intrusive system for doing it yet. it can apparently be useful for all sorts of hive health checking.

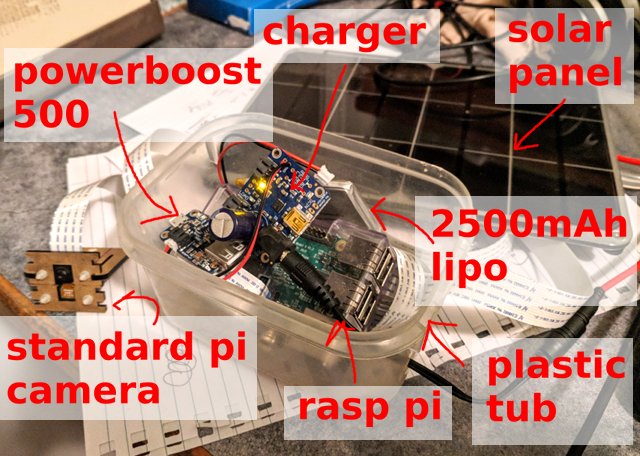

the first thing to do was collect some sample data. a raspberry pi, a standard pi camera and a solar panel are pretty simple to get going, and at 1 frame every 10 seconds you get 5,000+ images over a day (6am to 9pm).
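as a quick check of that figure, here's the arithmetic as a tiny sketch. the function name is made up for illustration; the hours and frame interval are the ones quoted above.

```python
# capture window and frame interval taken from the text above
START_HOUR = 6          # 6am
END_HOUR = 21           # 9pm
SECONDS_PER_FRAME = 10


def frames_per_day(start_hour, end_hour, seconds_per_frame):
    """number of frames captured between two hours of the same day."""
    seconds = (end_hour - start_hour) * 60 * 60
    return seconds // seconds_per_frame


print(frames_per_day(START_HOUR, END_HOUR, SECONDS_PER_FRAME))  # 5400
```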

here's an example image... how many bees can you count?

what exactly is the question?

the second thing was to decide exactly what i was trying to get the neural net to do. if the task is "count bees in an image" you could arguably try to regress directly to the number but it didn't feel like the easiest thing to start with and it doesn't allow any fun tracking of individual bees over frames. instead i decided to focus on localising every bee in the image.

a quick sanity check of an off the shelf single shot multi box detector didn't give great results. kinda not surprisingly, especially given the density of bees around the hive entrance. (protip: transfer learning is not the answer to everything) but that's ok; i have a super constrained image, only have 1 class to detect and don't actually care about a bounding box as such, just whether a bee is there or not. can we do something simpler?

v1: fully convolutional "bee / no bee" in a patch

my first quick experiment was a patch based "bee / no bee in image" detector. i.e. given an image patch what's the probability there is at least 1 bee in the image. doing this as a fully convolutional net on very small patches meant it could easily run on full res data. this approach kind of worked but was failing for the hive entrance where there is a much denser region of bees.

v2: RGB image -> black / white bitmap

i quickly realised this could easily be framed instead as an image to image translation problem. the input is the RGB camera image and the output is a single channel image where a "white" pixel denotes the center of a bee.

[images: RGB input (cropped) | single channel output (cropped)]
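to make the target format concrete, here's a minimal sketch of turning a list of bee centres into that single channel image. the function name and the (row, col) convention are assumptions for illustration, not taken from the project code.

```python
import numpy as np


def centres_to_bitmap(centres, height, width):
    """render (row, col) bee centres as a single channel image; 1.0 = bee centre."""
    bitmap = np.zeros((height, width), dtype=np.float32)
    for row, col in centres:
        # ignore any centre that falls outside the image
        if 0 <= row < height and 0 <= col < width:
            bitmap[row, col] = 1.0
    return bitmap


# two hypothetical bees in a tiny 8x6 image
label = centres_to_bitmap([(2, 3), (5, 1)], height=8, width=6)
```

everything that isn't a bee centre stays 0, so the net is learning a very sparse target.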

labelling

step three was labelling. it wasn't too hard to roll a little TkInter app for selecting / deselecting bees on an image and saving the results in a sqlite database. i spent quite a bit of time getting this tool right; anyone who's done a reasonable amount of hand labelling knows the difference it can make :/ luckily (as we'll see later) having access to a lot of samples meant i could get quite a good result with semi supervised approaches.
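the storage side of a tool like this can be tiny. here's a sketch of one way to do the select / deselect bookkeeping in sqlite; the table name, columns and function names are all hypothetical, not the schema the real tool uses.

```python
import sqlite3


def open_label_db(path=":memory:"):
    """open (or create) a label database with one row per labelled bee."""
    db = sqlite3.connect(path)
    db.execute("""create table if not exists labels (
                    img text not null,
                    x integer not null,
                    y integer not null,
                    primary key (img, x, y))""")
    return db


def toggle_bee(db, img, x, y):
    """select the bee at (x, y) if absent, deselect it if already present."""
    cur = db.execute("delete from labels where img=? and x=? and y=?", (img, x, y))
    if cur.rowcount == 0:
        db.execute("insert into labels (img, x, y) values (?, ?, ?)", (img, x, y))
    db.commit()


def bees_for(db, img):
    """all labelled bee positions for one image, in a stable order."""
    rows = db.execute("select x, y from labels where img=? order by x, y", (img,))
    return rows.fetchall()
```

a real tool would probably want to match clicks to the nearest existing label within a few pixels rather than requiring an exact (x, y) hit.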

the model

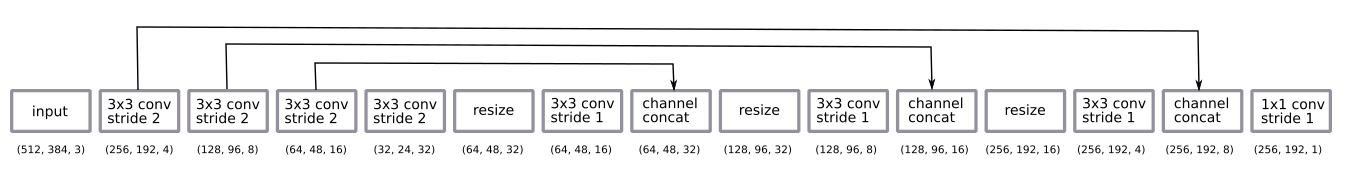

the architecture of the network is a very vanilla u-net.

- a fully convolutional network trained on half resolution patches but run against full resolution images

- encoding is a sequence of four 3x3 convolutions with stride 2

- decoding is a sequence of nearest neighbours resizes + 3x3 convolution (stride 1) + skip connection from the encoders

- final layer is a 1x1 convolution (stride 1) with sigmoid activation (i.e. binary bee / no bee choice per pixel)

after some empirical experiments i chose to only decode back to half the resolution of the input. it was good enough.
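to see how the resolutions line up, here's a small sketch that traces the spatial size through the network. it assumes "same" padding (so a stride 2 conv maps n to ceil(n / 2)) and that each decoder step is paired with the encoder output of matching size; both are assumptions, not details confirmed above.

```python
import math


def unet_shapes(size, num_encoder_layers=4):
    """trace spatial size through stride 2 encoders and x2 upsizes."""
    encoder = []
    for _ in range(num_encoder_layers):
        size = math.ceil(size / 2)   # stride 2 conv with 'same' padding
        encoder.append(size)
    # decode from the deepest layer back up to the first encoder output,
    # i.e. half the input resolution. each step doubles the size and is
    # paired with the skip connection from the encoder layer listed with it.
    decoder = []
    for skip in reversed(encoder[:-1]):
        size = size * 2
        decoder.append((size, skip))
    return encoder, decoder


encoder, decoder = unet_shapes(256)
print(encoder)  # [128, 64, 32, 16]
print(decoder)  # [(32, 32), (64, 64), (128, 128)]
```

with an input size that's a multiple of 16 every upsized tensor matches its skip connection exactly; other sizes would need cropping or padding before the two can be combined.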

i did the decoding using a nearest neighbour resize instead of a deconvolution pretty much out of habit.
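for reference, a nearest neighbour resize is about as simple as upsizing gets: every pixel is just repeated. a numpy sketch (the function name is made up for illustration):

```python
import numpy as np


def nearest_neighbour_upsize(x, factor=2):
    """x is (height, width, channels); every pixel becomes a factor x factor block."""
    return np.repeat(np.repeat(x, factor, axis=0), factor, axis=1)


x = np.arange(4, dtype=np.float32).reshape(2, 2, 1)
y = nearest_neighbour_upsize(x)
print(y[:, :, 0])
# [[0. 0. 1. 1.]
#  [0. 0. 1. 1.]
#  [2. 2. 3. 3.]
#  [2. 2. 3. 3.]]
```

there are no weights in the resize itself; all the learning happens in the 3x3 convolution that follows it.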

the network was trained with Adam and it's small enough that batch norm didn't seem to help much. i was surprised how simple a network and how few filters i could get away with.

i applied the standard sort of data augmentation you'd expect; random rotation & image colour distortion. the patch based training approach means we inherently get a form of random cropping. i didn't flip the images since i've always got the camera on the same side of the hive.
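a rough sketch of that kind of patch sampling plus augmentation. some simplifications to flag: rotation here is only by multiples of 90 degrees (a stand-in for arbitrary angles), colour distortion is just a random per channel gain, and the label is assumed to be the same resolution as the image. the function name and the gain range are made up.

```python
import numpy as np


def random_training_patch(image, label, patch_size, rng):
    """image is (H, W, 3) floats in [0, 1]; label is (H, W) with 1.0 at bee centres."""
    height, width = label.shape
    # random crop: picking a patch position is itself a form of augmentation
    top = rng.integers(0, height - patch_size + 1)
    left = rng.integers(0, width - patch_size + 1)
    img = image[top:top + patch_size, left:left + patch_size]
    lab = label[top:top + patch_size, left:left + patch_size]
    # rotate image and label together so bee centres stay aligned (no flips)
    k = int(rng.integers(0, 4))
    img, lab = np.rot90(img, k), np.rot90(lab, k)
    # colour distortion on the image only: random per channel gain
    gain = rng.uniform(0.8, 1.2, size=3)
    img = np.clip(img * gain, 0.0, 1.0)
    return img, lab


rng = np.random.default_rng(0)
image = rng.uniform(size=(64, 48, 3))
label = np.ones((64, 48), dtype=np.float32)
img, lab = random_training_patch(image, label, patch_size=32, rng=rng)
```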

one subtle aspect was the need to post process the output predictions. with a probabilistic output we get a blurry cloud around where bees might be. to convert this into a hard one-bee-one-pixel decision i added thresholding + connected components + centroid detection using the skimage measure module. this bit was hand rolled and tuned purely based on eyeballing results; it could totally be included in the end to end stack as a learnt component though. TODO :)

[images: input | raw model output | cluster centroids]
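the thresholding + connected components + centroid steps described above can be sketched as below. note this is a dependency-light stand-in: it uses a hand written flood fill with 4-connectivity rather than the skimage measure module, and the 0.5 threshold is an arbitrary assumption, not the tuned value.

```python
from collections import deque

import numpy as np


def bee_centroids(prob, threshold=0.5):
    """threshold -> 4-connected components -> one (row, col) centroid per component."""
    mask = prob > threshold
    seen = np.zeros_like(mask, dtype=bool)
    height, width = mask.shape
    centroids = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        # flood fill one component, collecting its pixels
        queue, pixels = deque([(r0, c0)]), []
        seen[r0, c0] = True
        while queue:
            r, c = queue.popleft()
            pixels.append((r, c))
            for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                if 0 <= nr < height and 0 <= nc < width and mask[nr, nc] and not seen[nr, nc]:
                    seen[nr, nc] = True
                    queue.append((nr, nc))
        rows, cols = zip(*pixels)
        centroids.append((float(np.mean(rows)), float(np.mean(cols))))
    return centroids


prob = np.zeros((10, 10), dtype=np.float32)
prob[1:3, 1:3] = 0.9   # a blurry 2x2 blob
prob[6, 7] = 0.8       # a single confident pixel
prob[9, 0] = 0.3       # below threshold, ignored
print(bee_centroids(prob))  # [(1.5, 1.5), (6.0, 7.0)]
```

the number of bees in the frame is then just the length of the returned list.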

generalisation over days

over one day

my initial experiments were with images over a short period of a single day. it was very easy to get a model running extremely well on this data with a small number of labelled images (~30)

[images: day 1 sample 1 | day 1 sample 2 | day 1 sample 3]

over many days

things got more complicated when i started to include longer periods over multiple days. one key aspect was the lighting difference (time of day, as well as different weather). another was that i was putting the camera out manually each day and just sticking it in kinda the same spot with blu tack. a third more surprising difference was that with grass growing the tops of dandelions look a lot like bees apparently (i.e. the first round of trained models hadn't seen this and when it appeared it was a constant false positive)

most of this was solved already by data augmentation and none of it was a show stopper. in general the data doesn't have much variation, which is great since that allows us to get away with a simple network and training scheme.

[images: day 1 sample | day 2 sample | day 3 sample]

an example of prediction

this image shows an example of the predictions. it's interesting to note this is a case where there were many more bees than any image i labelled, a great validation that the fully convolutional approach trained on smaller patches works.

it does ok across a range of detections; i imagine the lack of diversity in the background is a biiiiig help here and that running this network on some arbitrary hive wouldn't be anywhere near as good.

[images: high density around entrance | varying bee sizes | high speed bees!]

labelling tricks

semi supervised learning

the ability to get a large number of images makes this a great candidate for semi supervised learning.

a very simple approach of ...

- capture 10,000 images

- label 100 images & train model_1

- use model_1 to label other 9,900 images

- train model_2 with "labelled" 10,000

... results in a model_2 that does better than model_1.
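the steps above can be sketched as a single function. `train` and `predict` are placeholders the caller supplies (they're not functions from the project), so this only shows the shape of the loop, not how the real models were trained.

```python
def pseudo_label_round(train, predict, labelled, unlabelled):
    """one round of pseudo labelling.

    labelled: list of (x, y) pairs; unlabelled: list of x.
    train(pairs) -> model and predict(model, x) -> y are supplied by the caller.
    """
    # train the first model on the small hand labelled set
    model_1 = train(labelled)
    # use it to "label" everything else
    pseudo = [(x, predict(model_1, x)) for x in unlabelled]
    # train the second model on hand labels plus pseudo labels
    model_2 = train(labelled + pseudo)
    return model_1, model_2
```

whether model_2 actually beats model_1 depends on the data; nothing in the loop itself guarantees it.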

here's an example. note that model_1 has some false positives (far left center & blade of grass) and some false negatives (bees around the hive entrance)

[images: model_1 | model_2]

labelling by correcting a poor model

this kind of data is also a great example of when correcting a bad model is quicker than labelling from scratch...

- label 10 images & train model

- use model to label next 100 images

- use labelling tool to correct the labels of these 100 images

- retrain model with 110 images

- repeat ....
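as a loop, that looks something like the sketch below. `train`, `predict` and `correct` are hypothetical callables; `correct` stands in for a human fixing the model's proposal in the labelling tool.

```python
def label_by_correction(train, predict, correct, images, seed_labels, batch_size=100):
    """grow a labelled set by correcting model proposals batch by batch.

    seed_labels: dict image -> label for the hand labelled seed images.
    correct(image, proposed) -> label stands in for a human fixing the proposal.
    """
    labels = dict(seed_labels)
    todo = [img for img in images if img not in labels]
    model = train(labels)
    while todo:
        batch, todo = todo[:batch_size], todo[batch_size:]
        for img in batch:
            # the model proposes, the human corrects
            labels[img] = correct(img, predict(model, img))
        # retrain on everything labelled so far
        model = train(labels)
    return model, labels
```

the win comes from `correct` being much cheaper than labelling from scratch, which only holds once the model's proposals are mostly right.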

this is a very common pattern i've seen and sometimes makes you need to rethink your labelling tool a bit..

counts over time

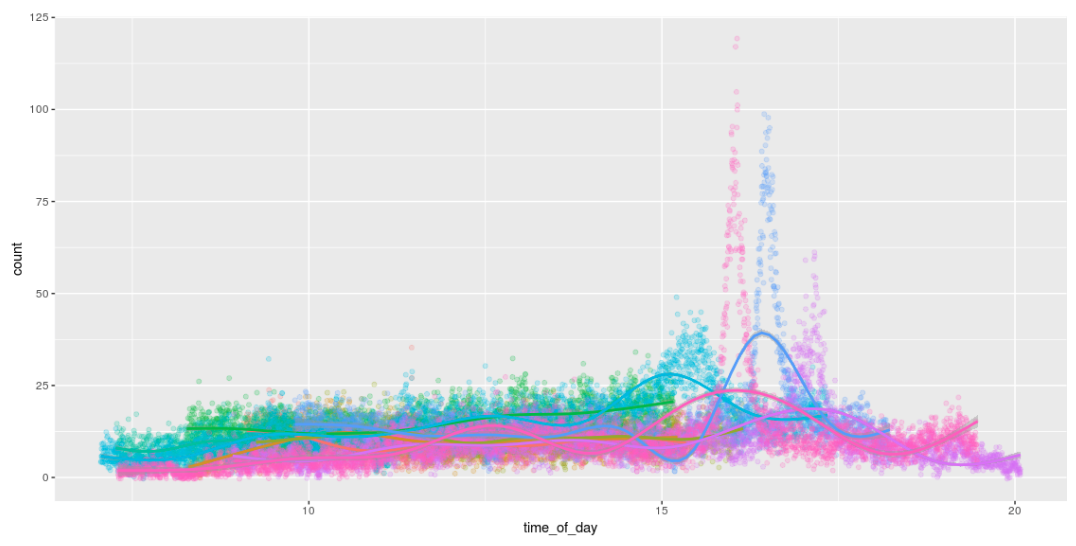

being able to locate bees means you can count them! this makes for fun graphs like this that show the number of bees over a day. i love how they all get busy and race home around 4pm :)
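going from per frame counts to a graph like this is mostly a bucketing exercise. a minimal sketch that averages counts per hour; the function name and the choice of hourly buckets are assumptions, not how the graph above was made.

```python
from collections import defaultdict
from datetime import datetime


def mean_count_per_hour(samples):
    """samples: iterable of (datetime, bee_count); returns {hour: mean count}."""
    totals = defaultdict(lambda: [0, 0])  # hour -> [sum of counts, number of frames]
    for timestamp, count in samples:
        bucket = totals[timestamp.hour]
        bucket[0] += count
        bucket[1] += 1
    return {hour: total / n for hour, (total, n) in sorted(totals.items())}
```

at one frame every 10 seconds each hourly bucket averages up to 360 frames, which smooths out a lot of the frame to frame noise.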

running inference on the pi

running a model for inference on the pi was a big part of this project.

directly on the pi hardware

the first baseline was to freeze the tensorflow graph and just run it directly on the pi. this works without any problem, it's just the pi can only do 1 image / second :/

running on a movidius neural compute stick

i was very excited about the possibility of getting this model to run on the pi using a movidius neural compute stick. they are an awesome bit of kit.

sadly it doesn't work :/ their API to convert from a tensorflow graph to their internal model format doesn't support the way i was doing decoding, so i had to convert upsizing from nearest neighbour resampling to a deconvolution. that's not a big deal, except it still doesn't work. there are a bunch of little problems i've got bug reproduction cases for. once they are fixed i can revisit...

[update sep 2018] after lots of hoop jumping i actually did get things running! hooray. see this issue for some further info.

v3 model: RGB image -> bee count

this led me to the third version of my model; can we regress directly from the RGB input to a count of the bees? if we do this we can avoid any problems relating to unsupported ops & kernel bugs on the neural compute stick, though it's unlikely this will be as good as the centroids approach of v2.

i was originally wary of trying this since i expected it would take a lot more labelling (it's no longer a patch based system) however! given a model that does pretty well at locating them, and a lot of unlabelled data, we can make a pretty good synthetic dataset by applying the v2 rgb image -> bee location model and just counting the number of detections.
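building that synthetic dataset is about as simple as it sounds. in this sketch `detect_centroids` is a placeholder for the full v2 pipeline (model + post processing) and is assumed to return one entry per detected bee.

```python
def synthetic_count_dataset(detect_centroids, images):
    """pair each unlabelled image with the number of bees the v2 style detector finds."""
    return [(image, len(detect_centroids(image))) for image in images]
```

the v3 model can only ever be as good as these labels, so any systematic miscounting by v2 gets baked into the regression targets.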

this model was pretty easy to train and gives reasonable results... (though it's not as good as just counting the centroids detected by the v2 model)

sample actual vs predicted for some test data

| actual | 40 | 19 | 16 | 15 | 13 | 12 | 11 | 10 | 8 | 7 | 6 | 4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| v2 (centroids) predicted | 39 | 19 | 16 | 13 | 13 | 14 | 11 | 8 | 8 | 7 | 6 | 4 |
| v3 (raw count) predicted | 33.1 | 15.3 | 12.3 | 12.5 | 13.3 | 10.4 | 9.3 | 8.7 | 6.3 | 7.1 | 5.9 | 4.2 |

... but unfortunately still doesn't work on the NCS. (it runs, i just can't get it to give anything but random results). i've generated some more bug reproduction cases and again will come back to it... eventually...
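to put a rough number on the gap between v2 and v3, here's the mean absolute error over the twelve samples in the table above. it's only twelve points, so treat it as indicative rather than a proper evaluation.

```python
# values copied from the actual vs predicted table
actual = [40, 19, 16, 15, 13, 12, 11, 10, 8, 7, 6, 4]
v2_centroids = [39, 19, 16, 13, 13, 14, 11, 8, 8, 7, 6, 4]
v3_raw_count = [33.1, 15.3, 12.3, 12.5, 13.3, 10.4, 9.3, 8.7, 6.3, 7.1, 5.9, 4.2]


def mean_absolute_error(truth, predicted):
    """average of |truth - predicted| over paired samples."""
    return sum(abs(t - p) for t, p in zip(truth, predicted)) / len(truth)


print(round(mean_absolute_error(actual, v2_centroids), 2))  # 0.58
print(round(mean_absolute_error(actual, v3_raw_count), 2))  # 1.98
```

most of the v3 error comes from the busiest frames, where it undercounts by several bees.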

next steps?

as always there's still a million things to tinker with...

- get the entire thing ported to the jevois embedded camera. i've done a bit of tinkering with this but wanted to have the NCS working as a baseline first. i want 120fps bee detection!!!

- tracking bees over multiple frames / with multiple cameras for optical flow visualisation

- more detailed study of benefit of semi supervised approach and training a larger model to label for a smaller model

- investigate power usage of the NCS; how to factor that into hyperparam tuning?

- switch to building a small version of farm.bot for doing some cnc controlled seedling genetic experiments (i.e. something completely different)

code

all the code for this is on github