brain of mat kelcey...

visualising the consistent hash

September 26, 2010 at 04:00 PM | categories: Uncategorizedthe resource allocation problem

consider the problem of allocating N resources across M servers (N >> M)

modulo hash

a common approach is the straight forward modulo hash...

if we have 4 servers;

servers = [server0, server1, server2, server3]we can allocate a resource to a server by simply

- hashing the resource

hash(resource) = 54

- reducing modulo 4

54 % 4 = 2

- allocating to that numbered server

servers[2] = server2

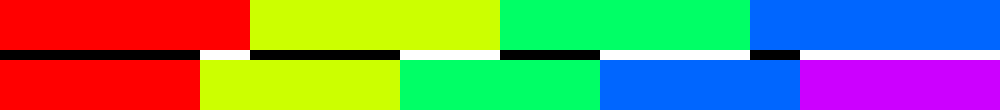

we can visualise how this scheme maps resources to servers by allocating a colour to each server; server0 server1 server2 server3 and, assuming we are hashing to a value between 0 and 99, draw the following chart ...

... where the colour of the nth column represents which server a resource hashing to n would be allocated to.

... where the colour of the nth column represents which server a resource hashing to n would be allocated to.

this hashing scheme is nice for a couple of reasons

- it's very simple

- it allocates resources evenly across the servers (assuming you have a good hashing function)

however it has one big drawback; what happens when you change the number of servers? say for example that due to extra load we have to add another server; server4

switching from modulo 4 to modulo 5 means that a resource that used to hash to server2 ...

54 % 4 = 2now hashs to server4 ...

54 % 5 = 4

in fact if we compare the difference in the hashing we get the following ...

... where the top bar represents the allocation with 4 servers the bottom bar represents the allocation with 5 servers, with white areas between representing cases of a resource changing which server is was allocated to.

this is pretty bad in terms of reallocation; a whooping 80% of the resources have changed which server they are assigned to.

divisor hash

how about instead of modulo arithmetic we try divisor instead?

considering 4 servers again we allocate a resource by

- hashing the resource as before

hash(resource) = 54

- reducing divisor 25 (25=100/4; ie hash max / number servers)

54 / (100/4) = 2

- allocating to that numbered server

servers[2] = server2

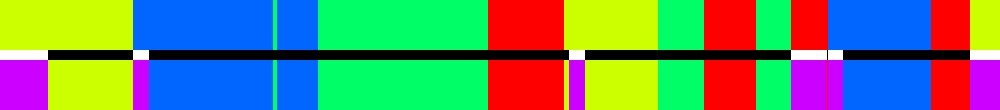

as before we can visualise how this scheme maps resources to servers by again allocating a colour to each server; server0 server1 server2 server3 and, assuming we are hashing to a value between 0 and 99, draw the following chart ...

again if we get a 5th server server4 we can see how the resources are reallocated ...

this time we only 50% reallocation, instead of 80%, so that's an improvement. we also continue to spread the resources evenly across the servers which is great.

but of course, we can do better!

consistent hash

in a consistent hash we associate ranges of the hash space to servers by hashing the servers themselves.

starting with 4 servers we can hash them (by name, eg 'server0') into the range 0 to 90107 (a smallish prime) giving ... server0 => 67981, server1 => 24530, server2 => 71186, server3 => 27735

... which can be converted into the ranges ... server1 => (0, 24530), server3 => (24531, 27735) server0 => (27736, 67981), server2 => (67982, 71186), server1 => (71186, 90106),

visually represented as ...

allocation of a resource to a server is simply done now by hashing the resource and see which range it falls into.

adding a 5th server is a done by hashing the new server; eg server4 => 74391 and adjusting the ranges.

we can see how this scheme ensures that as many resources as possible retain their original server allocation.

however there's a pretty obvious problem; where as the previous methods divided the hash space evenly this method is way off.

we'd like the ratios to be 0.25 for the 4 server case and 0.20 for the 5 server case; but instead they are server0 => 0.44, server1 => 0.48, server2 => 0.04, server3 => 0.04 and server0 => 0.44, server1 => 0.44, server2 => 0.04, server3 => 0.04, server4 => 0.04

luckily there's a pretty simple fix; simply hash each server multiple times!

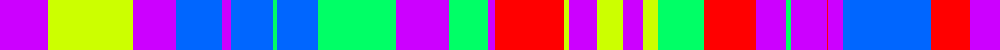

if we hash each server 5 times, using 5 different hash functions, we get the following allocations

which are this time much closer to being even; server0 => 0.20, server1 => 0.26, server2 => 0.26, server3 => 0.28 and server0 => 0.17, server1 => 0.19, server2 => 0.21, server3 => 0.24, server4 => 0.18

and the more times we hash the closer we get to an even allocation. yay! we get the best of both worlds; an even allocation and the minimum amount of reallocation as the number of servers change.

there's one final trick that can be done with a consistent hash. turns out we don't have to give the same number of slots to each server

starting with an even allocation ...

we might decide to get server4 twice the number of slots that the others have ...

this results in an uneven allocation of ... server0 => 0.16, server1 => 0.13, server2 => 0.17, server3 => 0.20, server4 => 0.34

why would we want to have a non even allocation? a couple of reasons i could think of are..

- a server with twice the grunt could get handle twice the load so should get twice the slots

- it's an interesting way to handle a/b testing; introduce a new server by slowing 'dialing' up it's slots

interesting stuff!

all the code used to generate the images for this page are available on github

26th september 2010